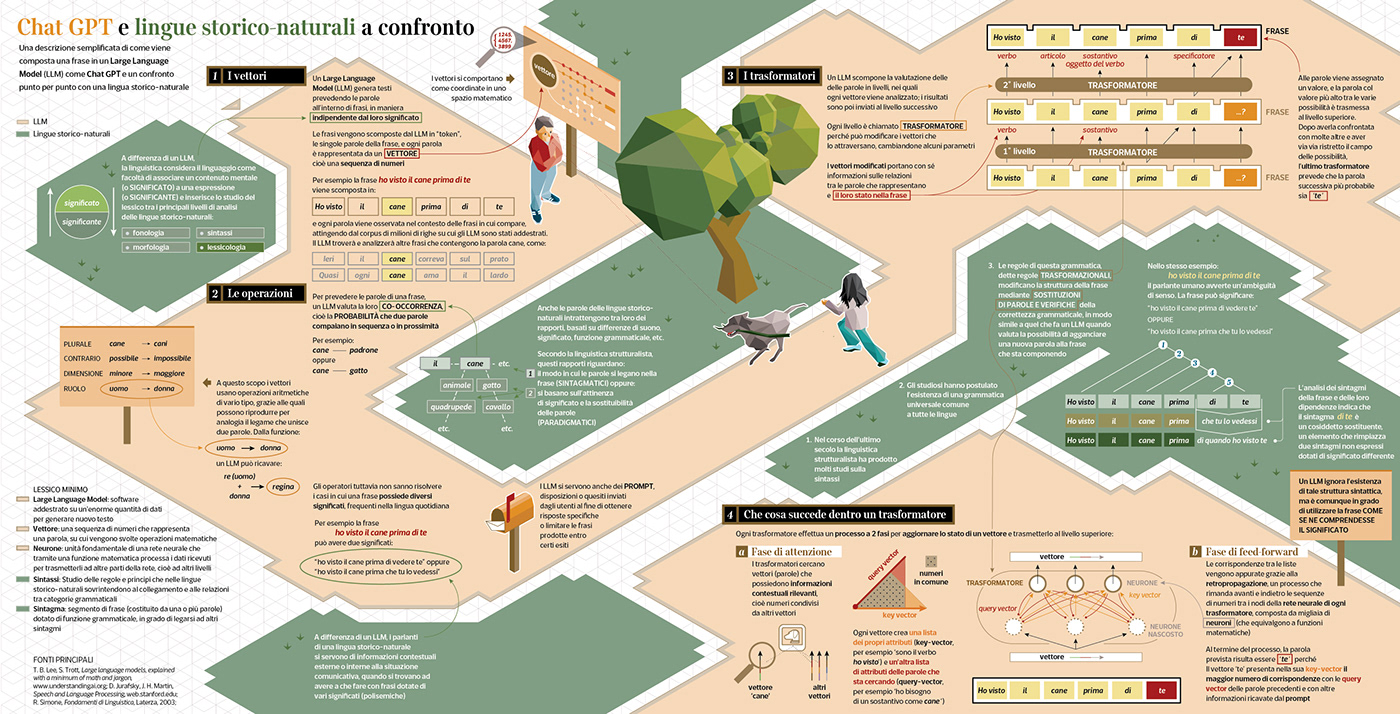

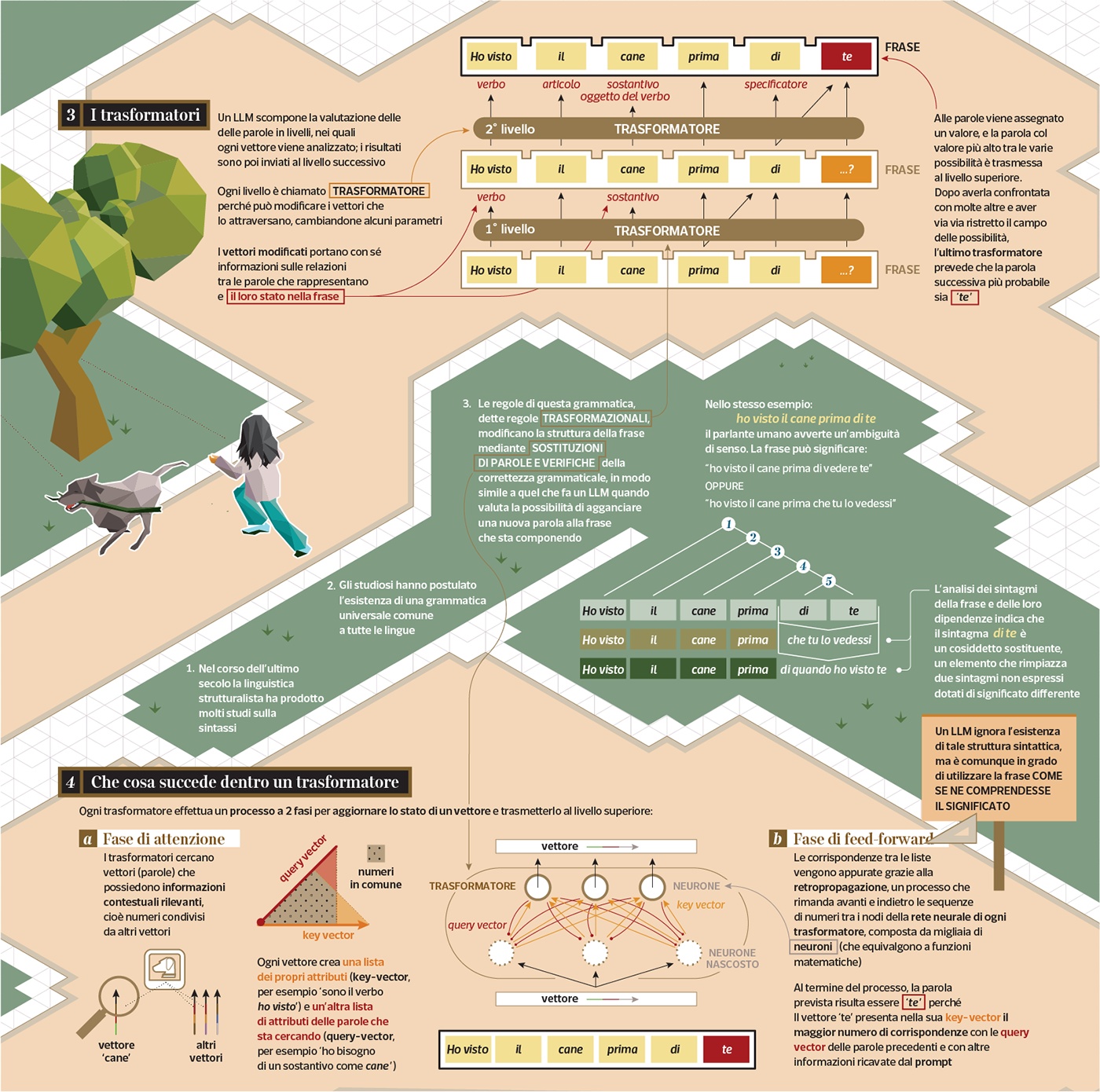

A new visualization on #Linguistics for @la_lettura n.621, this time on the process an LLM undergoes when generating a sentence, and on the same process performed by a human (from the point of view of structuralist Linguistics).

Large Language Models have been in the limelight since the release of ChatGPT in November 2022, with new models announced seemingly every month. With ChatGPT's debut, generative LLMs pushed their way into the everyday work of big companies while conquering the front pages of newspapers around the world (which, one by one, are now denying permission to train such LLMs on the content they publish).

But few seem to know how an LLM actually churns out words. The primary goal of this infographic is to fill that gap by showing the process an LLM goes through while producing seemingly human language.

The second goal of this infographic is to set this generative process against natural (human) language, that is, the language we speak every day. Generations of scholars have forged an array of models to represent how linguistic signs are formed and used, eventually paying attention to contextual information and nuances of meaning.

For the sake of this comparison, and because of the space limits of the visualization, only a few general notes on syntax and a specific focus on transformational rules (as conceived by structural linguistics) have been included.

Without dwelling on the various criticisms (like the well-founded accusations of systematic plagiarism boosted by technological progress), it is important to note that the public debate on the potential of this tool stopped one step short of reviving a twentieth-century debate on how meaning is transmitted through human language, on its definition, and on its nature.

Looking at the results achieved by some notable scholars (for example Halliday's systemic functional linguistics, or Wittgenstein's late works), it seems naive to rule out completely that LLMs can use meaning as we do, and to conclude that they are therefore limited to mimicking how humans use it.

In his late Philosophical Investigations, Wittgenstein states: “In particular, for a large class of cases, although not for all, in which we use the word meaning, it can be defined thus: the meaning of a word is its use in the language”. In other words, meaning is a function of use, but of a socially regulated and coordinated use, not of personal, individual use. Knowing the meaning of a sign means knowing the conditions under which that sign is used; meaning is therefore systematic (not at the mercy of individuality) and tied to the socio-cultural context.

So, what does it mean to use meaning, in its most general definition, if not to know how to use words and connect them in such a way as to convey a sense that the community of speakers can recognize? And how exactly does the way an LLM uses meaning differ from this? The answer likely lies in considering meaning as purely contextual.